Is rendering like painting?

Every day brings a new deluge of images to scroll through. Some come with contextualizing information, others are absorbed into the homogeneity of the media feed. In the wake of these image–text assemblages, it seems to me that computer-generated images require their own medium-specific ways of grappling with the question of representation. Given that their process of production is rendered completely opaque by the resulting image, it is necessary to demystify the labour hidden beneath the images’ surfaces. Without means to distinguish photoreal from photographic, they circulate as invisible images. By borrowing from more traditional artistic disciplines like painting, sculpture, and theatre, we can find ways to open up the artistic potential of computer-generated imagery and consider photoreal rendering’s relationship to reality.

The most obvious analogue to rendering may be photography; after all, the final product of a render is an image. Photorealism, first and foremost, necessitates fidelity to the way reality looks through a camera. A camera can record what otherwise passes by as a moment; it objectifies spatio-temporal experience. In almost every way photoreal rendering mimics our optical experience of reality. For instance, global illumination algorithms ensure that realistic light simulation is inherent to the process, even if other content is stylized. But verisimilitude is not simply achieved by copying the behaviour of light; it is more convincing when an image also acknowledges its own mediation. Therefore compositing effects can further replicate the mechanics of photographic representation, adding barrel distortion, chromatic aberration, and bokeh to make the rendering look like it originated from a camera. Ultimately a photograph is indexed to material reality through the distortions of the lens or grain of the film, producing signature effects like lens flares. In a rendering, such indexical marks are reproduced iconographically. Once the camera is set and the scene is ready, it takes time to calculate the interactions between light sources and reflective surfaces. I think of it like developing film. But there is an entire process of modelling, texturing, and compositing that occurs prior to outputting the final image. And it is in this process that I find rendering to be as materialistic as sculpture, as imaginative as painting, and as interpretive as theatre. As the hegemony of the camera fades with time, new indexical marks will come to be privileged. Perhaps the materialist indexes of the rendering itself will be seen as a kind of realism. Given both the breadth and depth of possibilities available to rendering, it seems pertinent to address what links it maintains between art and the real.

First, it is important to consider the representational paradigms in which an artwork circulates: how can it be understood, how is it informed by its process of production? What undergirds the symbol, and what empowers the document to represent reality? These questions are fundamental to understanding rendering, a process of making images that look very much like things they are not. The meaningfulness of these images is found in the obscured aspects of production as much as in their visible surface.

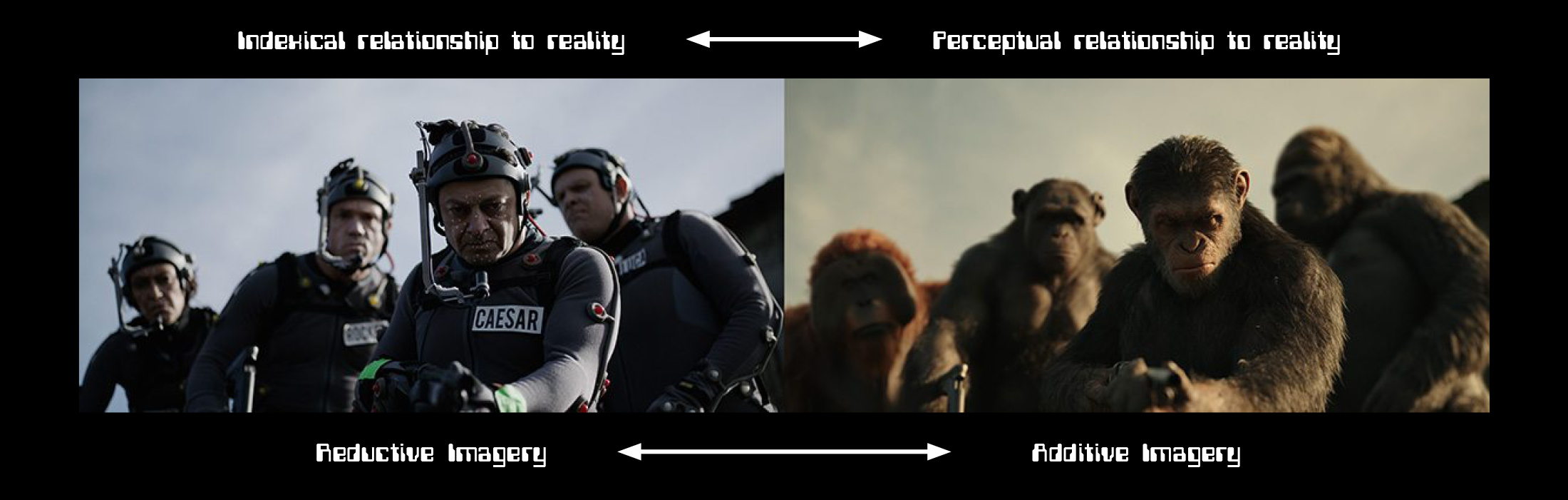

Media theorist Lev Manovich argues that computer-generated images and cinematic visual effects have more in common with hand-drawn animation than they do with photographically captured images.[1] In order to distinguish these two kinds of images (which may end up looking exactly alike, photoreal and photographic the same) I have elsewhere put forth the notion of additive and reductive images.[2] Additive images are constructed: every aspect of them is generated. Contrast this with reductive processes that use photographic technologies to ‘capture’ images from real life. Reductive images are taken from a source; they refer to some existing set of circumstances that can be seen with a camera. Additive images do not use cameras, at least not directly; instead they are painted or otherwise assembled from the imagination. Reductive images begin with a specific moment or scene, and the resulting photograph can then be edited or retouched in a variety of ways, but its source remains locatable in history. By framing a moment, reductive images narrow in on the plethora of activity that characterizes reality to extract a singular viewpoint. Additive images operate the other way around. The image output is the result of a very long process of assemblage and manipulation, and it is its completion rather than its derivation that marks its place in historical time. Together, additive and reductive images form a spectrum: some images begin with a camera snap but use software to add further elements, while others may use photographically derived textures on virtual geometry in a digital model. Most images incorporate both additive and reductive processes; very rarely do we encounter an image that was photographed without computer editing. But it is useful to be able to indicate what kinds of processes resulted in what kind of image.

In this sense, rendering belongs to a trajectory of painting where perceptual rather than indexical interpretations of the real reign supreme. That is to say, the representation does not occur by tracing or marking the real. Rendering artists simulate light effects in order to imply materiality, action, and depth. Like painting, the rendering can be said to be entirely deliberate because every element that comprises the final image had to be manually inserted into it. The output operates on the basis of perception, not limited by physical laws, chemical processes, or traces of actual events. Instead, its realism comes from its capacity to evoke mimetic or symbolic interpretations of the real.

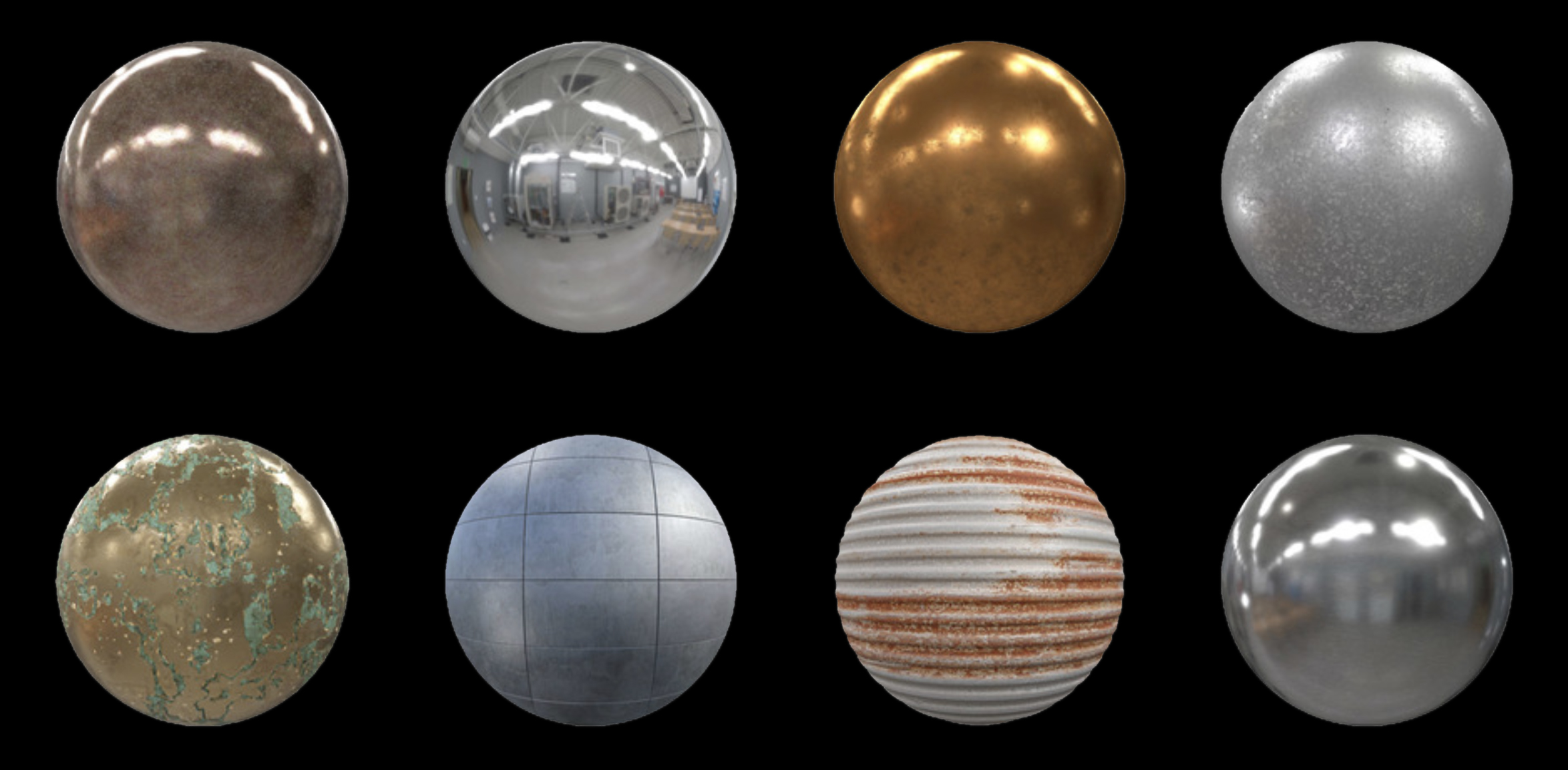

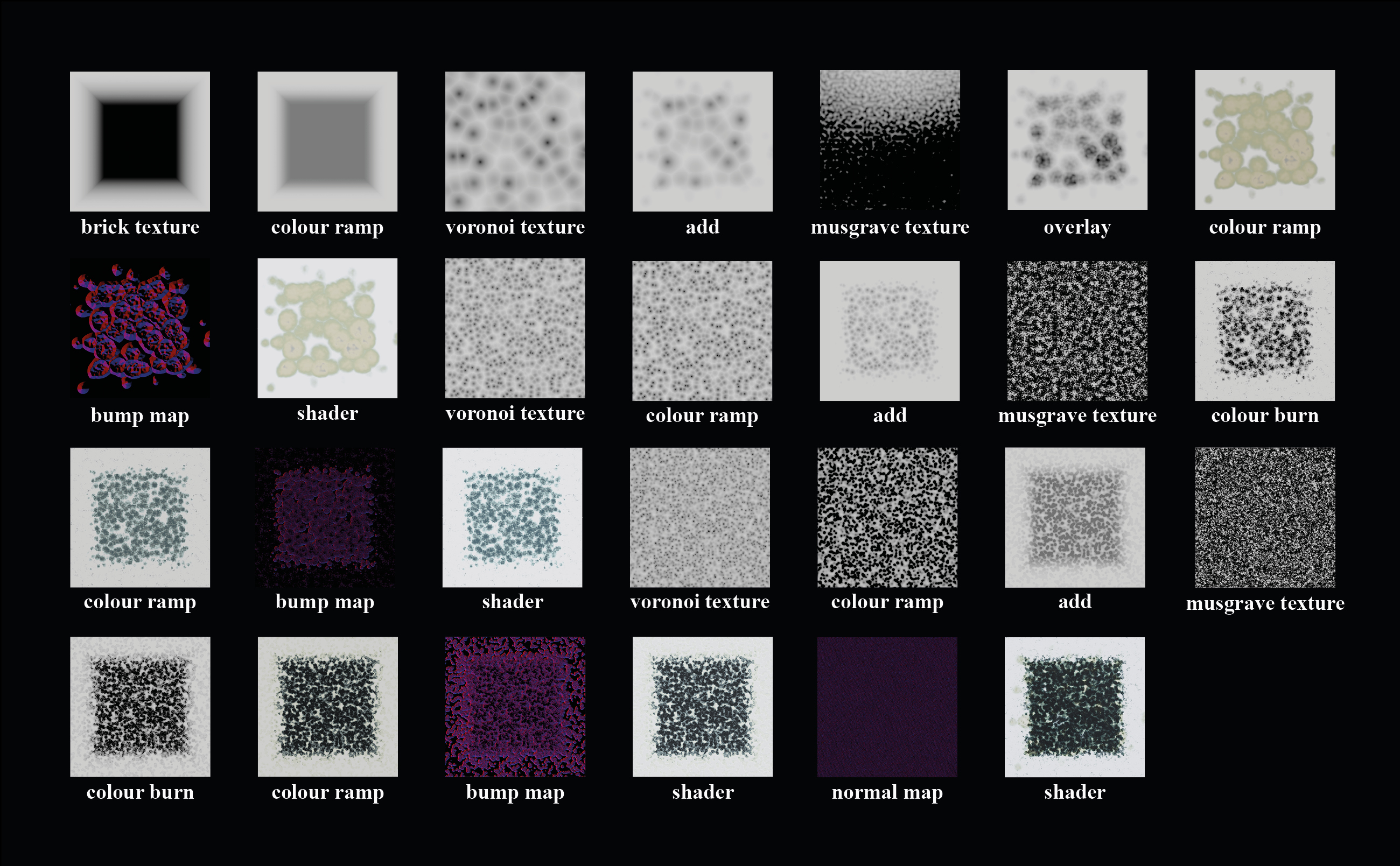

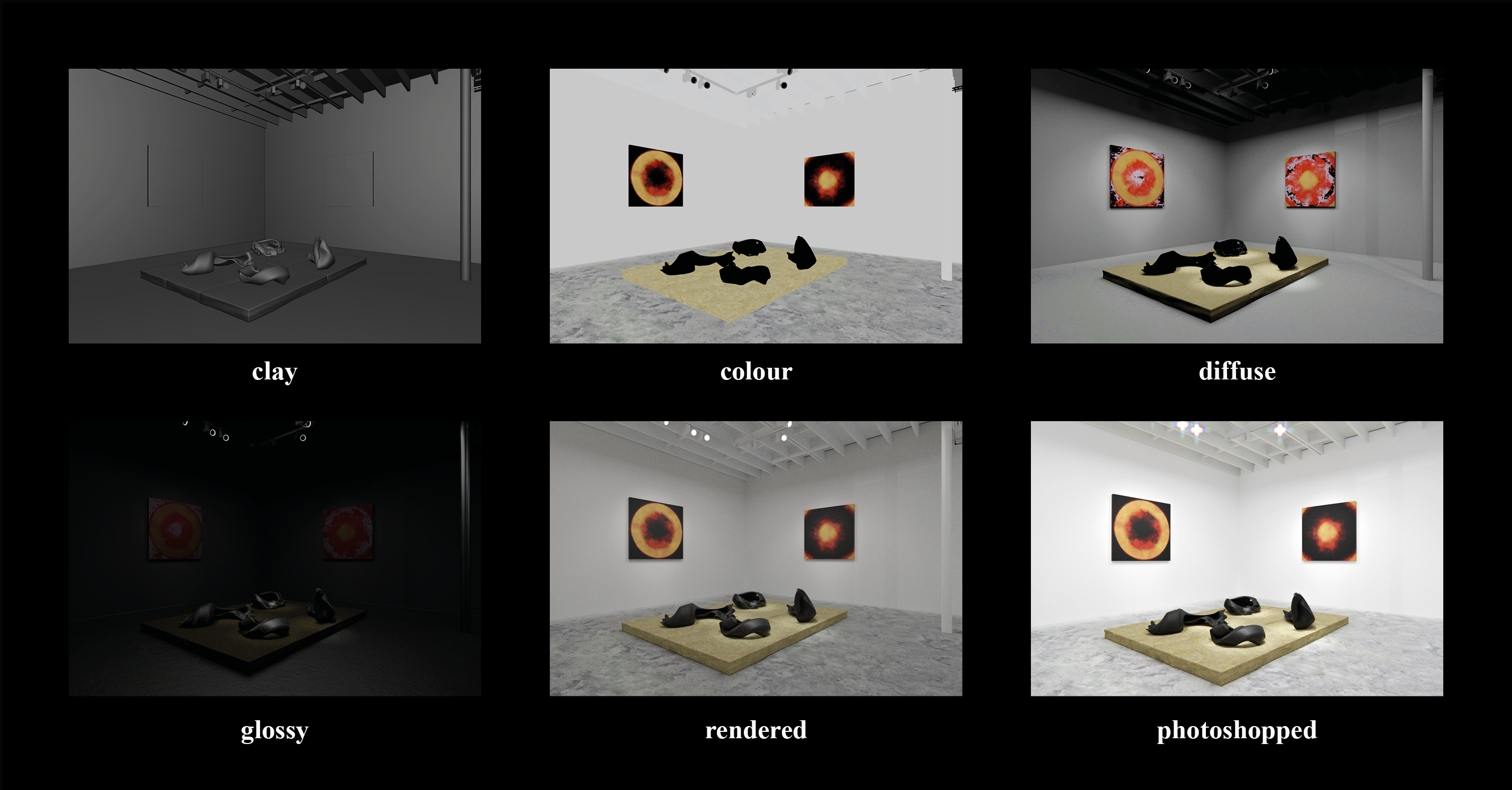

The process of shading (assigning an object’s surface qualities, materials, and textures) is perhaps the most akin to painting. Where painters build up layers of paint to produce the desired surface, renderers use a variety of shader nodes to route various image layers (called maps) together. Diffuse maps determine the colour of a surface, roughness maps affect its reflectivity, and bump maps are used to give a sense of micro-detail to a surface. These maps are used in conjunction with surface imperfections, muddying the glossiness of a surface with smudges or scratches, implying a history of wear and tear. These maps may be derived from photoscanned elements (neutral, documentary shots of detailed textures) or they may be procedurally generated by layering different kinds of shader nodes. Procedural textures tend to make use of mathematical functions, whereby colour is assigned a numerical value (0 for black, 1 for white, etc.) and these values are multiplied or graphically plotted to produce masks and patterns. Any of these elements may be applied by digitally painting on the surface of a model; a vast array of brush types exists for these purposes. But it is the way we look at the final rendering that may most closely mimic how we look at a painting. With the knowledge that it is in fact a rendering and not a photograph, we scan the image for clues of its construction, signs of ‘the artist’s hand’, or evidence that distinguishes it from a real photo and reveals intent. Simultaneously, we are aware that nothing is depicted that is not constructed. Every element of the image therefore contains potential meaning; every aspect has a reason for its inclusion.
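To make the arithmetic behind procedural textures a little more concrete, here is a minimal sketch in Python. It is not how any particular rendering package implements its shader nodes; the function names (`stripes`, `radial_falloff`, `multiply`, `render_mask`) and the choice of patterns are assumptions made purely for illustration. Each function returns a greyscale value between 0 (black) and 1 (white), and multiplying two patterns together yields a mask.

```python
import math

def stripes(u, v, frequency=4.0):
    """Soft vertical stripes: a sine wave remapped from [-1, 1] to [0, 1]."""
    return 0.5 + 0.5 * math.sin(2.0 * math.pi * frequency * u)

def radial_falloff(u, v):
    """1.0 (white) at the texture centre, fading to 0.0 (black) at distance 0.5 and beyond."""
    distance = math.hypot(u - 0.5, v - 0.5)
    return max(0.0, 1.0 - 2.0 * distance)

def multiply(a, b):
    """Multiply blend: black (0) in either input forces black in the result."""
    return a * b

def render_mask(size=8):
    """Sample the combined pattern at the centre of each cell in a size x size grid."""
    rows = []
    for y in range(size):
        row = []
        for x in range(size):
            u = (x + 0.5) / size  # horizontal texture coordinate in [0, 1]
            v = (y + 0.5) / size  # vertical texture coordinate in [0, 1]
            row.append(multiply(stripes(u, v), radial_falloff(u, v)))
        rows.append(row)
    return rows

if __name__ == "__main__":
    # Crude preview: map each greyscale value to a character, dark to light.
    shades = " .:-=+*#@"
    for row in render_mask(16):
        print("".join(shades[min(int(value * len(shades)), len(shades) - 1)] for value in row))
```

In a node-based shader editor, the same idea would typically be expressed by wiring a pattern node and a gradient node into a multiply node; the resulting greyscale mask could then drive roughness or blend between two materials.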

And yet, there is so much more to a rendering than shading. The earliest computer-generated images were raw geometry: it was the shapes and their movement that defined an image. In this sense we can grasp some sculptural analogies within the rendering process. Indeed, sculpting is the name given to a kind of free-form modelling present in many rendering software packages. But most renders begin with primitive shapes (cubes, spheres, tori) and use these to build up all elements in a scene. These shapes are refined in order to become a vast array of objects. In early renderings, like first-generation 3D video games, these objects functioned iconically: they roughly represented their source materials, using a limited number of faces and vertices to mimic the basic profile of the intended objects. In order to produce photoreal models, an artist will have to research how the objects are produced, why they look the way they do in real life, and what unseen factors were influential on the final design. It is a deeply material process, requiring close attention to otherwise banal qualities. The interrelationship of objects in a scene gives them a kind of weight or physicality, all implied through the flat imagery of the final render. But unlike images, the modelled objects can be navigated around and seen from multiple perspectives; they, like sculpture, surround the viewer. In the final rendering, it is a combination of geometry and surface shading that gives an object its object-ness. As a viewer, it becomes important to consider the symbolism of each object in relation to the others: it is not necessarily the surface that gives pause for artistic contemplation, but rather what each object’s materials are, where the objects are positioned, and how they operate together. For those who stumble across a photoreal rendering without the knowledge to distinguish it from a photograph, it is the objects and sculptural qualities that will appear foremost in the image. The image will appear to be of these constituents, a documentation of objects in a scene, and so they will bear the brunt of scrutiny.

However, the rendering’s relation to sculpture is ultimately metaphorical. Whether for a still image or video animation, the virtual geometry is mediated by the flat screen. As viewers, we are asked to contemplate the rendering at something of a distance: it is not a photo, not a painting, not comprising sculptural objects with real histories of incidental wear and tear. So, we may ask, is rendering like conceptual art? Are we meant to remain within the virtual space of speculative possibility: does the fiction of the object really matter to the contemplation it inspires? Like conceptual art, rendering tends to use bureaucratic or administrative aesthetics and verbs, even if only below the surface. Indeed, much commercial rendering serves as a kind of concept art, a pre-visualization of a product to come. These resulting images are speculative propositions unrooted in any specific moment or place, instead consisting of symbols and icons, referring to abstract concepts that might not possess physical indexes. Therefore the rendering’s primary concerns are not sensual but conceptual. In film, computer-generated imagery is used to advance the concept of a narrative, building a world in which a story and its constituent thematic concerns can unfold. Even at the outskirts of photorealism and beyond the suspension of disbelief, these images hold a power to reflect abstract, emotional, or infrastructural aspects of reality. Whether or not they exist as depicted is secondary to the way these images paint a picture of an often-illegible real. Such depictions become further incorporated into the real: not reductively, as a photograph of a specific place in time, but additively, as a precedent for future responses.

Above, I noted that the final render can be said to be entirely deliberate. This is true in a certain sense, but only half-true in another. All aspects, tools, and technologies that make up the rendering process are produced by human rather than ‘natural’ means, but no single individual can hold all of these complexities at once. Instead, a digital artist builds on the work of many other technical artists. This produces a new ‘natural’ sphere in which the renderer operates. Elements painstakingly refined by one artist become raw materials in the hands of another. It is very much possible for these materials to turn on the artist, to produce happy accidents, to misbehave and steer the work in an unforeseen direction. In this sense, we can think of the whole process as something like theatre, where there is an emphasis on choreography or rehearsal rather than strictly embodied direction. For instance, in physics simulations, the artist cedes control to computer algorithms that calculate fluid dynamics, or muscle and fat micro-movements. These are played back many times, refined with each subsequent iteration. It is no coincidence that both performances and computers make use of scripting to achieve their final products. A large degree of choreographic interpretation exists between the initial artistic vision and final output.

It becomes fascinating to consider how photorealism may find itself in a lineage of realist and anti-realist theatre traditions—like Antonin Artaud’s surrealism in which the mise-en-scène threatens to overwhelm the narrative, or Bertolt Brecht’s antagonistic realism that attends to the social infrastructures that enable representation. Because of its additive production process, rendering always depicts reality with attention to the formal tropes that mediate and represent reality. These mimetic capacities find their power when deployed not merely to confuse what is real and what is fabrication, but when they delineate the means by which our reality is itself constructed.

–February 2021

Notes